Massive parallelism: Exploit massive parallelism in the consideration of multiple interpretations and hypotheses.

The system we have built and are continuing to develop, called DeepQA, is a massively parallel probabilistic evidence-based architecture. For the Jeopardy! Challenge, we use more than 100 different techniques for analyzing natural language, identifying sources, finding and generating hypotheses, finding and scoring evidence, and merging and ranking hypotheses. What is far more important than any particular technique we use is how we combine them in DeepQA such that overlapping approaches can bring their strengths to bear and contribute to improvements in accuracy, confidence, or speed.

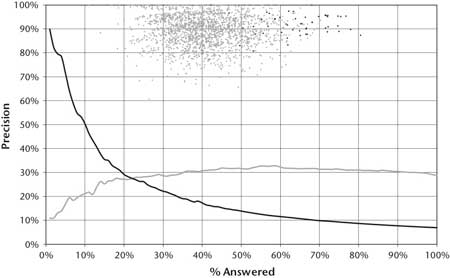

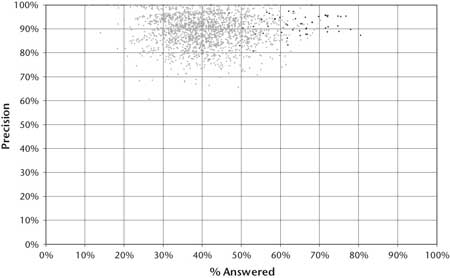

The darker dots on the graph represent Ken Jennings's games. Ken Jennings had an unequaled winning streak in 2004, in which he won 74 games in a row. Based on our analysis of those games, he acquired on average 62 percent of the questions and answered with 92 percent precision. Human performance at this task sets a very high bar for precision, confidence, speed, and breadth.

Meeting the Jeopardy! Challenge requires advancing and incorporating a variety of QA technologies, including parsing, question classification, question decomposition, automatic source acquisition and evaluation, entity and relation detection, logical form generation, and knowledge representation and reasoning.

As our results dramatically improved, we observed that system-level advances allowing rapid integration and evaluation of new ideas and new components against end-to-end metrics were essential to our progress. This was echoed at the OAQA workshop for experts with decades of investment in QA, hosted by IBM in early 2008. Among the workshop conclusions was that QA would benefit from the collaborative evolution of a single extensible architecture that would allow component results to be consistently evaluated in a common technical context against a growing variety of what were called Challenge Problems. Different challenge problems were identified to address various dimensions of the general QA problem. Jeopardy! was described as one addressing dimensions including high precision, accurate confidence determination, complex language, breadth of domain, and speed.

Some more complex clues contain multiple facts about the answer, all of which are required to arrive at the correct response but are unlikely to occur together in one place. For example:

In contrast to the system evaluation shown in figure 2, which can display a curve over a range of confidence thresholds, the human performance shows only a single point per game, based on the observed precision and percent answered that the winner demonstrated in the game. A further distinction is that in these historical games the human contestants did not have the liberty to answer all questions they wished. Rather, the percent answered consists of those questions for which the winner was confident and fast enough to beat the competition to the buzz. The system performance graphs shown in this paper are focused on evaluating QA performance, and so do not take into account competition for the buzz. Human performance helps to position our system's performance, but obviously, in a Jeopardy! game, performance will be affected by competition for the buzz, and this will depend in large part on how quickly a player can compute an accurate confidence and how the player manages risk.

A 30-clue Jeopardy! board is organized into six columns. Each column contains five clues and is associated with a category. Categories range from broad subject headings like "history," "science," or "politics" to less informative puns like "tutu much," in which the clues are about ballet, to actual parts of the clue, like "who appointed me to the Supreme Court?" where the clue is the name of a judge, to "anything goes" categories like "potpourri." Clearly some categories are essential to understanding the clue, some are helpful but not necessary, and some may be useless, if not misleading, for a computer.

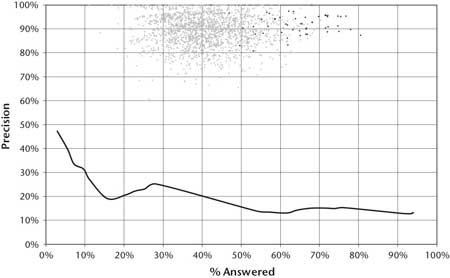

The PIQUANT and OpenEphyra baselines demonstrate the performance of state-of-the-art QA systems on the Jeopardy! task. In figure 5 we show two other baselines that demonstrate the performance of two complementary approaches on this task. The light gray line shows the performance of a system based purely on text search, using terms in the question as queries and search engine scores as confidences for candidate answers generated from retrieved document titles. The black line shows the performance of a system based on structured data, which attempts to look the answer up in a database by simply finding the named entities in the database related to the named entities in the clue. These two approaches were adapted to the Jeopardy! task, including identifying and integrating relevant content.
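To make the text-search baseline concrete, here is a minimal Python sketch under stated assumptions: the fraction of clue terms found in each document stands in for a real search engine score, document titles become the candidate answers, and that score is reported as the confidence. The function names and the two toy documents are invented for illustration; this is not the baseline system's code.

```python
import re
from typing import Dict, List, Set, Tuple

def tokenize(text: str) -> Set[str]:
    """Lowercased alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def text_search_baseline(clue: str, docs: Dict[str, str],
                         top_n: int = 3) -> List[Tuple[str, float]]:
    """Rank document titles as candidate answers; the retrieval score is the confidence."""
    query = tokenize(clue)
    if not query:
        return []
    scored = []
    for title, body in docs.items():
        # Fraction of clue terms found in the document: a crude stand-in
        # for a real search engine's relevance score.
        score = len(query & tokenize(body)) / len(query)
        if score > 0:
            scored.append((title, score))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_n]

# Two toy documents, written for this sketch.
docs = {
    "Crewel": "Crewel embroidery is done with wool yarn on cotton or linen cloth, "
              "often in floral patterns.",
    "Cross-stitch": "A form of counted-thread embroidery on fabric.",
}
clue = ("Though it sounds harsh, it's just embroidery, often in a floral "
        "pattern, done with yarn on cotton cloth.")
print(text_search_baseline(clue, docs))
```

Because the confidence here is only a term-overlap score, it carries no evidence about whether the title actually answers the clue, which is one way such a baseline can end up with poorly informed confidence.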

Clue: Though it sounds harsh, it's just embroidery, often in a floral pattern, done with yarn on cotton cloth.

The overarching principles in DeepQA are massive parallelism, many experts, pervasive confidence estimation, and integration of shallow and deep knowledge.

Confidence estimation was critical to shaping our overall approach in DeepQA. There is no expectation that any component in the system does a perfect job; all components post features of the computation and associated confidences, and we use a hierarchical machine-learning method to combine all these features and decide whether or not there is enough confidence in the final answer to attempt to buzz in and risk getting the question wrong.
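The idea of turning many component features into one buzz decision can be illustrated with a single logistic model. This is a deliberately simplified sketch: the DeepQA combiner is hierarchical and learned, whereas here the feature names (`type_match`, `passage_support`, `popularity`), the weights, the bias, and the threshold are all hypothetical, hand-picked values.

```python
import math
from typing import Dict

def combined_confidence(features: Dict[str, float],
                        weights: Dict[str, float], bias: float) -> float:
    """Logistic combination of component feature scores into one confidence.

    Features with no weight contribute nothing.
    """
    z = bias + sum(weights.get(name, 0.0) * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

def should_buzz(confidence: float, threshold: float) -> bool:
    """Buzz in only if the combined confidence clears the risk threshold."""
    return confidence >= threshold

# Hypothetical component features for one candidate answer, and made-up weights.
features = {"type_match": 1.0, "passage_support": 0.8, "popularity": 0.2}
weights = {"type_match": 2.0, "passage_support": 1.5, "popularity": 0.5}
conf = combined_confidence(features, weights, bias=-2.0)
print(f"confidence={conf:.3f}, buzz={should_buzz(conf, threshold=0.5)}")
```

In a learned combiner the weights would be fit to labeled question-answer data rather than set by hand, and the threshold would reflect how much risk the player is willing to take.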

Another class of decomposable questions is one in which a subclue is nested in the outer clue, and the subclue can be replaced with its answer to form a new question that can more easily be answered. For example:

Our metrics and baselines are intended to give us confidence that new methods and algorithms are improving the system or to inform us when they are not so that we can adjust research priorities.

Clue: Of the four countries in the world that the United States does not have diplomatic relations with, the one that's farthest north.

The questions used were 500 randomly sampled Jeopardy! clues from episodes in the past 15 years. The corpus that was used contained, but did not necessarily justify, answers to more than 90 percent of the questions. The result of the PIQUANT baseline experiment is illustrated in figure 4. As shown, on the 5 percent of the clues that PIQUANT was most confident in (left end of the curve), it delivered 47 percent precision, and over all the clues in the set (right end of the curve), its precision was 13 percent. Clearly the precision and confidence estimation are far below the requirements of the Jeopardy! Challenge.

Answer: A Hard Day's Night of the Living Dead
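The Before and After merge itself can be sketched as a word-overlap join. This is a minimal illustration that assumes the two subclue answers ("A Hard Day's Night" and "Night of the Living Dead") have already been found; the function name `before_and_after` is hypothetical, and the sketch says nothing about how DeepQA finds the subclue answers.

```python
from typing import Optional

def before_and_after(first: str, second: str) -> Optional[str]:
    """Join two answers whose words overlap at the seam (case-insensitive).

    Tries the longest possible overlap first; in the Before and After category
    the overlap is typically a single word. Returns None if nothing overlaps.
    """
    a, b = first.split(), second.split()
    for k in range(min(len(a), len(b)), 0, -1):
        if [w.lower() for w in a[-k:]] == [w.lower() for w in b[:k]]:
            return " ".join(a + b[k:])
    return None

print(before_and_after("A Hard Day's Night", "Night of the Living Dead"))
```

Returning `None` when no overlap exists gives a cheap way to reject pairs of subclue candidates that cannot form a valid Before and After answer.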

About 12 percent of the clues do not indicate an explicit lexical answer type but may refer to the answer with pronouns like "it," "these," or "this," or not refer to it at all. In these cases the type of answer must be inferred from the context. Here's an example:

Special instruction questions are those that are not self-explanatory but rather require a verbal explanation describing how the question should be interpreted and solved. For example:

Jeopardy! also has categories of questions that require special processing defined by the category itself. Some of them recur often enough that contestants know what they mean without instruction; for others, part of the task is to figure out what the puzzle is as the clues and answers are revealed (categories requiring explanation by the host are not part of the challenge). Examples of well-known puzzle categories are the "Before and After" category, where two subclues have answers that overlap by (typically) one word, and the "Rhyme Time" category, where the two subclue answers must rhyme with one another. Clearly these cases also require question decomposition. For example:

In addition to question-answering precision, the system's game-winning performance will depend on speed, confidence estimation, clue selection, and betting strategy. Ultimately the outcome of the public contest will be decided based on whether or not Watson can win one or two games against top-ranked humans in real time. The player with the highest earnings at the end of a one- or two-game match wins. A player's final earnings, however, often will not reflect how well the player did during the game at the QA task. This is because a player may decide to bet big on Daily Double or Final Jeopardy! questions. There are three hidden Daily Double questions in a game that can affect only the player lucky enough to find them, and one Final Jeopardy! question at the end that all players must gamble on. Daily Double and Final Jeopardy! questions represent significant events where players may risk all their current earnings. While potentially compelling for a public contest, a small number of games does not represent statistically meaningful results for the system's raw QA performance.

In this section we elaborate on the various aspects of the Jeopardy! Challenge.

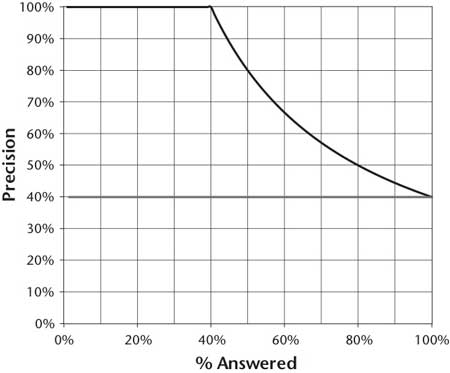

Perfect confidence estimation (upper line) and no confidence estimation (lower line).

Figure 2 shows a plot of precision versus percent attempted curves for two theoretical systems. It is obtained by evaluating the two systems over a range of confidence thresholds. Both systems have 40 percent accuracy, meaning they get 40 percent of all questions correct. They differ only in their confidence estimation. The upper line represents an ideal system with perfect confidence estimation. Such a system would identify exactly which questions it gets right and wrong and give higher confidence to those it gets right. As can be seen in the graph, if such a system were to answer the 50 percent of questions it had highest confidence for, it would get 80 percent of those correct. We refer to this level of performance as 80 percent precision at 50 percent answered. The lower line represents a system without meaningful confidence estimation. Since it cannot distinguish the questions it is likely to get right from those it is likely to get wrong, its precision is constant for all percent attempted. Developing more accurate confidence estimation means a system can deliver far higher precision even with the same overall accuracy.
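The two theoretical curves can be reproduced with a few lines of Python. In this sketch each system's outcomes are listed in descending order of confidence, and precision is computed over the top fraction answered. The "flat" ordering, which places 2 correct answers in every block of 5, is an assumed idealization of a system with no meaningful confidence; a random ordering would match it only on average.

```python
from typing import List

def precision_at(answered_frac: float, outcomes_by_conf: List[bool]) -> float:
    """Precision over the top `answered_frac` of questions ranked by confidence.

    outcomes_by_conf lists True (correct) / False (wrong), highest confidence first.
    """
    k = max(1, round(answered_frac * len(outcomes_by_conf)))
    return sum(outcomes_by_conf[:k]) / k

n, acc = 100, 0.40
n_correct = round(n * acc)  # both systems get 40 of 100 right

# Perfect confidence estimation: every correct answer outranks every wrong one.
perfect = [True] * n_correct + [False] * (n - n_correct)
# No meaningful confidence (idealized): 2 correct answers in every block of 5,
# so precision is 40 percent at every multiple of 5 questions answered.
flat = [True, True, False, False, False] * (n // 5)

for frac in (0.2, 0.4, 0.5, 0.8, 1.0):
    print(f"{frac:.0%} answered: perfect={precision_at(frac, perfect):.0%}, "
          f"flat={precision_at(frac, flat):.0%}")
```

At 50 percent answered the perfect ranking yields 40 correct out of 50, the 80 percent precision quoted above, while both curves meet at 40 percent when every question is answered.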

Figure 2. Precision Versus Percentage Attempted.

Outer subclue: Of Bhutan, Cuba, Iran, and North Korea, the one that's farthest north.
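The nested decomposition can be sketched as three steps: solve the inner subclue, substitute its answer into the outer clue, then solve the simpler outer question. Here `solve_inner` is a hypothetical stand-in that returns the inner answers stated in the text rather than searching for them, and the approximate latitudes are illustrative values supplied for this sketch, not data from the paper.

```python
from typing import List

# Approximate latitude (degrees north) of each country's geographic centre.
# Illustrative values supplied for this sketch; they are not from the paper.
APPROX_LATITUDE = {"Bhutan": 27.5, "Cuba": 21.5, "Iran": 32.0, "North Korea": 40.0}

def solve_inner(subclue: str) -> List[str]:
    """Hypothetical stand-in for a full QA pass on the inner subclue.

    It returns the answer set given in the text instead of searching for it.
    """
    return ["Bhutan", "Cuba", "Iran", "North Korea"]

def rewrite_outer(inner_answers: List[str]) -> str:
    """Substitute the inner answers into the outer clue, forming an easier question."""
    listed = ", ".join(inner_answers[:-1]) + ", and " + inner_answers[-1]
    return f"Of {listed}, the one that's farthest north."

def solve_outer(candidates: List[str]) -> str:
    """Answer the rewritten question by comparing latitudes."""
    return max(candidates, key=lambda country: APPROX_LATITUDE[country])

inner = solve_inner("The four countries in the world that the United States "
                    "does not have diplomatic relations with")
print(rewrite_outer(inner))
print(solve_outer(inner))
```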

Finally, the Jeopardy! Challenge represents a unique and compelling AI question similar to the one underlying Deep Blue (Hsu 2002): can a computer system be designed to compete against the best humans at a task thought to require high levels of human intelligence, and if so, what kind of technology, algorithms, and engineering is required? While we believe the Jeopardy! Challenge is an extraordinarily demanding task that will greatly advance the field, we appreciate that this challenge alone does not address all aspects of QA and does not by any means close the book on the QA challenge the way that Deep Blue may have for playing chess.

Figure 5. Text Search Versus Knowledge Base Search.

Clue: Invented in the 1500s to speed up the game, this maneuver involves two pieces of the same color.
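A naive way to pull a LAT out of clues like this one is to take the word that follows a demonstrative such as "this" or "these." The Python below is only an assumed heuristic for illustration (the pattern and the small stop-word list are hypothetical); it finds "maneuver" here, but without a parser it cannot handle clues that refer to the answer only with a pronoun such as "it."

```python
import re
from typing import Optional

# Hypothetical heuristic: the LAT is the word right after "this" or "these".
_DEMONSTRATIVE = re.compile(r"\b(?:this|these)\s+([A-Za-z'-]+)", re.IGNORECASE)
# Words that follow "this" without naming a type (a tiny, ad hoc list).
_NOT_A_TYPE = {"is", "was", "to", "of", "in", "and"}

def naive_lat(clue: str) -> Optional[str]:
    """Return a guessed lexical answer type, or None if no candidate is found."""
    for match in _DEMONSTRATIVE.finditer(clue):
        word = match.group(1).lower()
        if word not in _NOT_A_TYPE:
            return word
    return None

print(naive_lat("Invented in the 1500s to speed up the game, "
                "this maneuver involves two pieces of the same color."))
```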

In this case, we would not expect to find both subclues in one sentence in our sources; rather, if we decompose the question into these two parts and ask for answers to each one, we may find that the answer common to both questions is the answer to the original clue.
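The common-answer strategy can be sketched as an intersection of candidate lists. In this minimal Python example each subclue yields a dictionary of candidate answers with scores; the candidate names and scores are placeholders, and ranking shared candidates by the product of their two scores is an assumption made for the sketch, not the DeepQA scoring method.

```python
from typing import Dict, List

def combine_subclue_answers(cands1: Dict[str, float],
                            cands2: Dict[str, float]) -> List[str]:
    """Keep only answers proposed for both subclues, best first.

    Shared candidates are ranked by the product of their two scores
    (an assumption for this sketch).
    """
    common = cands1.keys() & cands2.keys()
    return sorted(common, key=lambda ans: cands1[ans] * cands2[ans], reverse=True)

# Placeholder candidates and scores for two subclues of one clue.
subclue1 = {"candidate_a": 0.6, "candidate_b": 0.3, "candidate_c": 0.1}
subclue2 = {"candidate_b": 0.5, "candidate_c": 0.2, "candidate_d": 0.4}
print(combine_subclue_answers(subclue1, subclue2))
```

Note that `candidate_a`, the top choice for the first subclue alone, drops out because nothing supports it for the second subclue.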

AI Magazine includes an article, "Building Watson: An Overview of the DeepQA Project," written by the IBM Watson Research Team, led by David Ferrucci. Read about this exciting project in the most detailed technical article available. We hope you will also take a moment to read through the archives of AI Magazine and consider joining us at AAAI. To join, please read more at The most recent online volume of AI Magazine is usually only available to members of the association. However, we have made an exception for this special article on Watson to share the excitement. Congratulations to the IBM Watson Team!

While Watson is equipped with betting strategies necessary for playing full Jeopardy!, from a core QA perspective we want to measure correctness, confidence, and speed, without considering clue selection, luck of the draw, and betting strategies. We measure correctness and confidence using precision and percent answered. Precision measures the percentage of questions the system gets right out of those it chooses to answer. Percent answered is the percentage of questions it chooses to answer (correctly or incorrectly). The system chooses which questions to answer based on an estimated confidence score: for a given threshold, the system will answer all questions with confidence scores above that threshold. The threshold controls the trade-off between precision and percent answered, assuming reasonable confidence estimation. For higher thresholds the system will be more conservative, answering fewer questions with higher precision. For lower thresholds, it will be more aggressive, answering more questions with lower precision. Accuracy refers to the precision if all questions are answered.
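The precision and percent-answered definitions translate directly into code. The sketch below, with hypothetical function names and a made-up set of five (confidence, correct) results, answers every question whose confidence is above the threshold, following the definition above.

```python
import math
from typing import List, Tuple

def precision_at_threshold(results: List[Tuple[float, bool]],
                           threshold: float) -> Tuple[float, float]:
    """Return (precision %, percent answered) for one confidence threshold.

    results holds one (confidence, is_correct) pair per question. The system
    answers every question whose confidence is above the threshold.
    """
    answered = [correct for conf, correct in results if conf > threshold]
    percent_answered = 100.0 * len(answered) / len(results)
    # Precision is undefined when nothing is answered.
    precision = 100.0 * sum(answered) / len(answered) if answered else math.nan
    return precision, percent_answered

def accuracy(results: List[Tuple[float, bool]]) -> float:
    """Accuracy is the precision obtained when every question is answered."""
    return 100.0 * sum(correct for _, correct in results) / len(results)

# Made-up results for five questions.
results = [(0.9, True), (0.8, True), (0.6, False), (0.4, True), (0.2, False)]
for t in (0.7, 0.5, 0.0):
    p, a = precision_at_threshold(results, t)
    print(f"threshold={t:.1f}: {p:.1f}% precision at {a:.0f}% answered")
print(f"accuracy={accuracy(results):.1f}%")
```

Raising the threshold from 0.5 to 0.7 moves this toy system from 66.7 percent precision at 60 percent answered to 100 percent precision at 40 percent answered, the conservative end of the trade-off.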

A recurring theme in our approach is the requirement to try many alternate hypotheses in varying contexts to see which produces the most confident answers given a broad range of loosely coupled scoring algorithms. Leveraging category information is another clear area requiring this approach.

Jeopardy! is a well-known TV quiz show that has been airing on television in the United States for more than 25 years (see the Jeopardy! Quiz Show sidebar for more information on the show). It pits three human contestants against one another in a competition that requires answering rich natural language questions over a very broad domain of topics, with penalties for wrong answers. The nature of the three-person competition is such that confidence, precision, and answering speed are of critical importance, with roughly 3 seconds to answer each question. A computer system that could compete at human champion levels at this game would need to produce exact answers to often complex natural language questions with high precision and speed and have a reliable confidence in its answers, such that it could answer roughly 70 percent of the questions asked with greater than 80 percent precision in 3 seconds or less.

Category: Before and After Goes to the Movies

Subclue 1: Film of a typical day in the life of the Beatles.

Subclue 2: Running from bloodthirsty zombie fans in a Romero classic.

An initial 4-week effort was made to adapt PIQUANT to the Jeopardy! Challenge. The experiment focused on precision and confidence. It ignored issues of answering speed and aspects of the game like betting and clue values.

DeepQA is an architecture with an accompanying methodology, but it is not specific to the Jeopardy! Challenge. We have successfully applied DeepQA to both the Jeopardy! and TREC QA tasks. We have begun adapting it to different business applications and additional exploratory challenge problems, including medicine, enterprise search, and gaming.

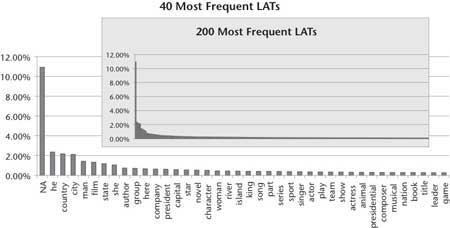

Figure 1. Lexical Answer Type Frequency.

Verbal instruction from host: We're going to give you a word comprising two postal abbreviations; you have to identify the states.

A compelling and scientifically appealing aspect of the Jeopardy! Challenge is the human reference point. Figure 3 contains a graph that illustrates expert human performance on Jeopardy! It is based on our analysis of nearly 2000 historical Jeopardy! games. Each point on the graph represents the performance of the winner in one Jeopardy! game.² As in figure 2, the x-axis of the graph, labeled "% Answered," represents the percentage of questions the winner answered, and the y-axis of the graph, labeled "Precision," represents the percentage of those questions the winner answered correctly.

Clue: They're the two states you could be reentering if you're crossing Florida's northern border.

The distribution of LATs has a very long tail, as shown in figure 1. We found 2500 distinct and explicit LATs in the 20,000-question sample. The most frequent 200 explicit LATs cover less than 50 percent of the data. Figure 1 shows the relative frequency of the LATs. It labels all the clues with no explicit type with the label NA. This aspect of the challenge implies that while task-specific type systems or manually curated data would have some impact if focused on the head of the LAT curve, it still leaves more than half the problems unaccounted for. Our clear technical bias for both business and scientific motivations is to create general-purpose, reusable natural language processing (NLP) and knowledge representation and reasoning (KRR) technology that can exploit as-is natural language resources and as-is structured knowledge rather than to curate task-specific knowledge resources.
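Head-of-the-curve coverage, the quantity behind the observation about the 200 most frequent LATs, can be computed from LAT counts as shown below. The counts are a made-up toy sample, not the 20,000-question data; questions without an explicit LAT (labeled NA) count toward the total but never toward the covered head.

```python
from collections import Counter

def head_coverage(lat_counts: Counter, total_questions: int, k: int) -> float:
    """Fraction of all questions whose LAT is one of the k most frequent LATs.

    lat_counts excludes questions with no explicit LAT (NA), but those
    questions are still included in total_questions.
    """
    covered = sum(count for _, count in lat_counts.most_common(k))
    return covered / total_questions

# Toy sample: 15 questions, 12 with an explicit LAT and 3 labeled NA.
toy = Counter({"country": 5, "city": 3, "man": 2, "film": 1, "maneuver": 1})
print(f"top-2 coverage: {head_coverage(toy, 15, 2):.0%}")
```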

Both present very interesting challenges from an AI perspective but were put out of scope for this contest and evaluation.

(Contestants are shown a picture of a B-52 bomber)

Clue: Secretary Chase just submitted this to me for the third time; guess what, pal. This time I'm accepting it.

Clue: Film of a typical day in the life of the Beatles, which includes running from bloodthirsty zombie fans in a Romero classic.

Written by David Ferrucci, Eric Brown, Jennifer Chu-Carroll, James Fan, David Gondek, Aditya A. Kalyanpur, Adam Lally, J. William Murdock, Eric Nyberg, John Prager, Nico Schlaefer, and Chris Welty

Clue: When hit by electrons, a phosphor gives off electromagnetic energy in this form.

There are many infrequent types of puzzle categories, including things like converting Roman numerals, solving math word problems, "sounds like," finding which word in a set has the highest Scrabble score, homonyms and heteronyms, and so on. Puzzles constitute only about 2–3 percent of all clues, but since they typically occur as entire categories (five at a time), they cannot be ignored for success in the Challenge, because getting them all wrong often means losing a game.

The center of what we call the "Winners Cloud" (the set of light gray dots in the graph in figures 3 and 4) reveals that Jeopardy! champions are confident and fast enough to acquire on average between 40 percent and 50 percent of all the questions from their competitors and to perform with between 85 percent and 95 percent precision.

Subclue 1: This archaic term for a mischievous or annoying child.

A similar baseline experiment was performed in collaboration with Carnegie Mellon University (CMU) using OpenEphyra,⁵ an open-source QA framework developed primarily at CMU. The framework is based on the Ephyra system, which was designed for answering TREC questions. In our experiments on TREC 2002 data, OpenEphyra answered 45 percent of the questions correctly using a live web search.

Winning at Jeopardy! requires accurately computing confidence in your answers. The questions and content are ambiguous and noisy, and none of the individual algorithms are perfect. Therefore, each component must produce a confidence in its output, and individual component confidences must be combined to compute the overall confidence of the final answer. The final confidence is used to determine whether the computer system should risk choosing to answer at all. In Jeopardy! parlance, this confidence is used to determine whether the computer will "ring in" or "buzz in" for a question. The confidence must be computed during the time the question is read and before the opportunity to buzz in. This is roughly between 1 and 6 seconds, with an average around 3 seconds.

There are a wide variety of ways one can attempt to characterize the Jeopardy! clues: for example, by topic, by difficulty, by grammatical construction, by answer type, and so on. A type of classification that turned out to be useful for us was based on the primary method deployed to solve the clue. The bulk of Jeopardy! clues represent what we would consider factoid questions: questions whose answers are based on factual information about one or more individual entities. The questions themselves present challenges in determining what exactly is being asked for and which elements of the clue are relevant in determining the answer. Here are just a few examples (note that while the Jeopardy! game requires that answers be delivered in the form of a question (see the Jeopardy! Quiz Show sidebar), this transformation is trivial, and for purposes of this paper we will just show the answers themselves):

IBM Research undertook a challenge to build a computer system that could compete at the human champion level in real time on the American TV quiz show, Jeopardy. The extent of the challenge includes fielding a real-time automatic contestant on the show, not merely a laboratory exercise. The Jeopardy Challenge helped us address requirements that led to the design of the DeepQA architecture and the implementation of Watson. After three years of intense research and development by a core team of about 20 researchers, Watson is performing at human expert levels in terms of precision, confidence, and speed at the Jeopardy quiz show. Our results strongly suggest that DeepQA is an effective and extensible architecture that can be used as a foundation for combining, deploying, evaluating, and advancing a wide range of algorithmic techniques to rapidly advance the field of question answering (QA).

As a measure of the Jeopardy! Challenge's breadth of domain, we analyzed a random sample of 20,000 questions, extracting the lexical answer type (LAT) when present. We define a LAT to be a word in the clue that indicates the type of the answer, independent of assigning semantics to that word. For example, in the following clue, the LAT is the string "maneuver."

Early on in the project, attempts to adapt PIQUANT (Chu-Carroll et al. 2003) failed to produce promising results. We devoted many months of effort to encoding algorithms from the literature. Our investigations ran the gamut from deep logical form analysis to shallow machine-translation-based approaches. We integrated them into the standard QA pipeline that went from question analysis and answer type determination to search and then answer selection. It was difficult, however, to find examples of how published research results could be taken out of their original context and effectively replicated and integrated into different end-to-end systems to produce comparable results. Our efforts failed to have significant impact on Jeopardy! or even on prior baseline studies using TREC data.

Clue: This archaic term for a mischievous or annoying child can also mean a rogue or scamp.

Subclue 2: This term can also mean a rogue or scamp.

With QA in mind, we settled on a challenge to build a computer system, called Watson,¹ which could compete at the human champion level in real time on the American TV quiz show, Jeopardy! The extent of the challenge includes fielding a real-time automatic contestant on the show, not merely a laboratory exercise.

Clue: Alphanumeric name of the fearsome machine seen here.

Figure 3. Champion Human Performance at Jeopardy.

With a wealth of enterprise-critical information being captured in natural language documentation of all forms, the problems with perusing only the top 10 or 20 most popular documents containing the user's two or three key words are becoming increasingly apparent. This is especially the case in the enterprise, where popularity is not as important an indicator of relevance and where recall can be as critical as precision. There is growing interest in having enterprise computer systems deeply analyze the breadth of relevant content to more precisely answer and justify answers to users' natural language questions. We believe advances in question-answering (QA) technology can help support professionals in critical and timely decision making in areas like compliance, health care, business integrity, business intelligence, knowledge discovery, enterprise knowledge management, security, and customer support. For researchers, the open-domain QA problem is attractive as it is one of the most challenging in the realm of computer science and artificial intelligence, requiring a synthesis of information retrieval, natural language processing, knowledge representation and reasoning, machine learning, and computer-human interfaces. It has had a long history (Simmons 1970) and saw rapid advancement spurred by system building, experimentation, and government funding in the past decade (Maybury 2004, Strzalkowski and Harabagiu 2006).

Our most obvious baseline is the QA system called Practical Intelligent Question Answering Technology (PIQUANT) (Prager, Chu-Carroll, and Czuba 2004), which had been under development at IBM Research by a four-person team for 6 years prior to taking on the Jeopardy! Challenge. At the time it was among the top three to five Text Retrieval Conference (TREC) QA systems. Developed in part under the U.S. government AQUAINT program³ and in collaboration with external teams and universities, PIQUANT was a classic QA pipeline with state-of-the-art techniques aimed largely at the TREC QA evaluation (Voorhees and Dang 2005). PIQUANT performed in the 33 percent accuracy range in TREC evaluations. While the TREC QA evaluation allowed the use of the web, PIQUANT focused on question answering using local resources. A requirement of the Jeopardy! Challenge is that the system be self-contained and not link to live web search.

The results form an interesting comparison. The search-based system has better performance at 100 percent answered, suggesting that the natural language content and the shallow text search techniques delivered better coverage. However, the flatness of the curve indicates the lack of accurate confidence estimation.⁶ The structured approach had better informed confidence when it was able to decipher the entities in the question and found the right matches in its structured knowledge bases, but its coverage quickly drops off when asked to answer more questions. To be a high-performing question-answering system, DeepQA must demonstrate both these properties to achieve high precision, high recall, and an accurate confidence estimation.

The goals of IBM Research are to advance computer science by exploring new ways for computer technology to affect science, business, and society. Roughly three years ago, IBM Research was looking for a major research challenge to rival the scientific and popular interest of Deep Blue, the computer chess-playing champion (Hsu 2002), that also would have clear relevance to IBM business interests.

We spent minimal effort adapting OpenEphyra, but like PIQUANT, its performance on Jeopardy! clues was below 15 percent accuracy. OpenEphyra did not produce reliable confidence estimates and thus could not effectively choose to answer questions with higher confidence. Clearly a larger investment in tuning and adapting these baseline systems to Jeopardy! would improve their performance; however, we limited this investment since we did not want the baseline systems to become significant efforts.

Many experts: Facilitate the integration, application, and contextual evaluation of a wide range of loosely coupled probabilistic question and

Clue: It's where Pele stores his ball.

Decomposable Jeopardy! clues generated requirements that drove the design of DeepQA to generate zero or more decomposition hypotheses for each question as possible interpretations.

Subclue 2: where store (cabinet, drawer, locker, and so on)

Inner subclue: The four countries in the world that the United States does not have diplomatic relations with (Bhutan, Cuba, Iran, North Korea).

We ended up overhauling nearly everything we did, including our basic technical approach, the underlying architecture, metrics, evaluation protocols, engineering practices, and even how we worked together as a team. We also, in cooperation with CMU, began the Open Advancement of Question Answering (OAQA) initiative. OAQA is intended to directly engage researchers in the community to help replicate and reuse research results and to identify how to more rapidly advance the state of the art in QA (Ferrucci et al. 2009).

Published in AI Magazine, Fall 2010. Copyright © 2010 AAAI. All rights reserved.

The Jeopardy! quiz show ordinarily admits two kinds of questions that IBM and Jeopardy Productions, Inc., agreed to exclude from the computer contest: audiovisual (A/V) questions and Special Instructions questions. A/V questions require listening to or watching some sort of audio, image, or video segment to determine a correct answer. For example:

The requirements of the TREC QA evaluation were different from those of the Jeopardy! Challenge. Most notably, TREC participants were given a relatively small corpus (1M documents) from which answers to questions must be justified; TREC questions were in a much simpler form compared to Jeopardy! questions; and the confidences associated with answers were not a primary metric. Furthermore, the systems were allowed to access the web and had a week to produce results for 500 questions. The reader can find details in the TREC proceedings⁴ and numerous follow-on publications.