Face recognition fails on animation, so Disney built a face recognition library specifically for animation

Face recognition has hit a snag: it works on the "three-dimensional" world of real people but fails on the "two-dimensional" world of animated characters. Disney's technical team is developing an algorithm to help animators search their archive of past content. By using PyTorch, the team greatly improved its efficiency.

Face recognition fails on animation

Any discussion of animation has to mention Disney, the entertainment empire founded in 1923. Having started with animation, Disney has led the development of animated film worldwide.

Every animated film represents the hard work of hundreds of people. With the release of “Toy Story”, the first fully computer-animated feature film, Disney began its journey into digital animation. As CGI and AI technology have developed, the way Disney produces and archives its animated films has also changed dramatically.

“Zootopia”, a global hit, took five years to complete

Today, Disney also employs a large number of computer scientists, who are using cutting-edge technology to change the way content is created and to lighten the load on the filmmakers behind the scenes.

How a century-old movie giant manages its digital content

Walt Disney Animation Studios reportedly has about 800 employees from 25 countries, including artists, directors, screenwriters, producers and technical staff.

Making a film involves many complex stages: from the initial inspiration to the story outline, script drafting, art design, character design, voice recording, animation, visual effects, editing and post-production.

As of March 2021, Walt Disney Animation Studios alone, which specializes in animated films, had produced and released 59 animated feature films. Together, these films contain hundreds of thousands of animated images.

Material on past animated characters is reused frequently in sequels, Easter eggs and design references

When animators are making a sequel or want to reference a particular character, they need to find a specific character, scene or object in a massive content archive. To do so, they often spend hours watching footage, picking out the clips they need by eye alone.

To solve this problem, Disney launched an AI project called “Content Genome” in 2016. It aims to build a digital archive of Disney content and help animators quickly and accurately identify faces in animation (whether they belong to a character or an object).

Training a face recognition algorithm specifically for animation

The first step in building the digital content library is to detect and tag the content of past works so that producers and users can search it more easily.
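To make the "tag, then search" idea concrete, here is a minimal sketch of how detection results could be turned into a searchable index. The record layout (film, frame number, label) and the functions `build_index` and `search` are hypothetical illustrations, not part of Content Genome, and the records in the usage lines are made up.

```python
from collections import defaultdict


def build_index(detections):
    """Map each detected label to a sorted list of (film, frame) occurrences.

    `detections` is an iterable of hypothetical (film, frame, label) records.
    """
    index = defaultdict(list)
    for film, frame, label in detections:
        index[label].append((film, frame))
    for occurrences in index.values():
        occurrences.sort()
    return dict(index)


def search(index, label):
    """Return every (film, frame) where `label` was tagged, or an empty list."""
    return index.get(label, [])


# Made-up records standing in for the output of a detector.
records = [
    ("film_a", 120, "character_1"),
    ("film_b", 48, "character_2"),
    ("film_a", 15, "character_1"),
]
index = build_index(records)
hits = search(index, "character_1")
```

A dictionary keyed by label keeps lookups constant-time; a production archive would more likely sit in a database or search engine, but the mapping from label to occurrences is the same.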

Face recognition technology is relatively mature, but can the same methods be applied to faces in animation?

After running experiments, the Content Genome technical team found that this works only in certain situations.

They selected two animated works, “Elena of Avalor” and “The Lion Guard”, manually annotated a sample of them and marked the faces in hundreds of frames with bounding boxes. Using this manually annotated dataset, the team confirmed that face detection based on a HOG + SVM pipeline performs poorly on animated faces, both human and animal.
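Scoring a detector against hand-drawn boxes usually means matching predicted boxes to annotated ones by overlap. The sketch below shows one common approach, greedy matching by intersection over union (IoU) at a threshold of 0.5; the source does not say which metric or threshold the team used, so both are assumptions, and the function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predicted, ground_truth, threshold=0.5):
    """Greedily match predictions to unmatched annotations for a single frame.

    Predictions are taken in the order given; a fuller evaluation would sort
    them by detector confidence first.
    """
    matched = set()
    true_positives = 0
    for p in predicted:
        best_j, best_iou = None, threshold
        for j, g in enumerate(ground_truth):
            if j in matched:
                continue
            overlap = iou(p, g)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
            true_positives += 1
    precision = true_positives / len(predicted) if predicted else 1.0
    recall = true_positives / len(ground_truth) if ground_truth else 1.0
    return precision, recall


# One annotated face; the detector finds it plus one spurious box.
annotated = [(0, 0, 10, 10)]
detected = [(0, 0, 10, 10), (50, 50, 60, 60)]
precision, recall = precision_recall(detected, annotated)
```

In this toy frame the spurious box halves precision while recall stays perfect, which is exactly the kind of false positive described below for flat animated backgrounds.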

Manually annotated faces in an animated frame

After analysis, the team concluded that methods like HOG + SVM are robust to changes in color, brightness or texture, but the models they used could only match animated characters with human proportions (i.e. two eyes, one nose and one mouth).

In addition, because animation backgrounds usually consist of flat areas with little detail, the Faster-RCNN model tended to mistake anything that stood out against a simple background for an animated face.

In “Cars”, traditional face recognition technology cannot detect or recognize the relatively abstract faces of the two race-car protagonists.

The team therefore concluded that they needed a technique that could learn a more abstract concept of a face.

The team chose PyTorch to train the model. According to the team, PyTorch gives them access to state-of-the-art pre-trained models that meet their training needs and make the archiving process more efficient.

During training, the team found that their dataset had enough positive samples but too few negative samples to train the model. They decided to augment the initial dataset with images that contained no animated faces but did have the visual features of animation.

To do this, they extended Torchvision’s Faster-RCNN implementation so that negative samples could be loaded during training without annotations.

This is also a new feature the team contributed to Torchvision 0.6 under the guidance of Torchvision’s core developers. Adding negative examples to the dataset greatly reduced false positives at inference time and produced excellent results.
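Torchvision's detection models take one target dictionary per image with `boxes` and `labels`; a negative sample is simply an image whose target has no boxes. The plain-Python sketch below shows only the dataset-side bookkeeping of mixing annotated positives with unannotated negatives. The `Sample` class and `build_training_samples` function are hypothetical, and in a real Torchvision pipeline the empty lists would become tensors of shape (0, 4) and (0,).

```python
import random
from dataclasses import dataclass, field


@dataclass
class Sample:
    """Hypothetical training record; empty boxes/labels mark a negative sample."""
    image_path: str
    boxes: list = field(default_factory=list)   # each box is (x1, y1, x2, y2)
    labels: list = field(default_factory=list)  # 1 = "face"; 0 is reserved for background


def build_training_samples(annotated, negative_paths, seed=0):
    """Combine annotated positives with annotation-free negatives, then shuffle."""
    samples = [Sample(path, list(boxes), [1] * len(boxes)) for path, boxes in annotated]
    # Negatives need no annotation work: they carry no boxes and no labels.
    samples += [Sample(path) for path in negative_paths]
    random.Random(seed).shuffle(samples)
    return samples


samples = build_training_samples(
    annotated=[("positive_001.png", [(10, 20, 60, 80)])],
    negative_paths=["background_001.png", "background_002.png"],
)
```

Because negatives cost no labeling effort, they can be added in bulk, which is what makes this an inexpensive way to push down false positives.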

Processing video with PyTorch: 10 times faster

With face detection working on animated images, the team’s next goal was to speed up video analysis, and PyTorch let them parallelize and accelerate other tasks effectively.

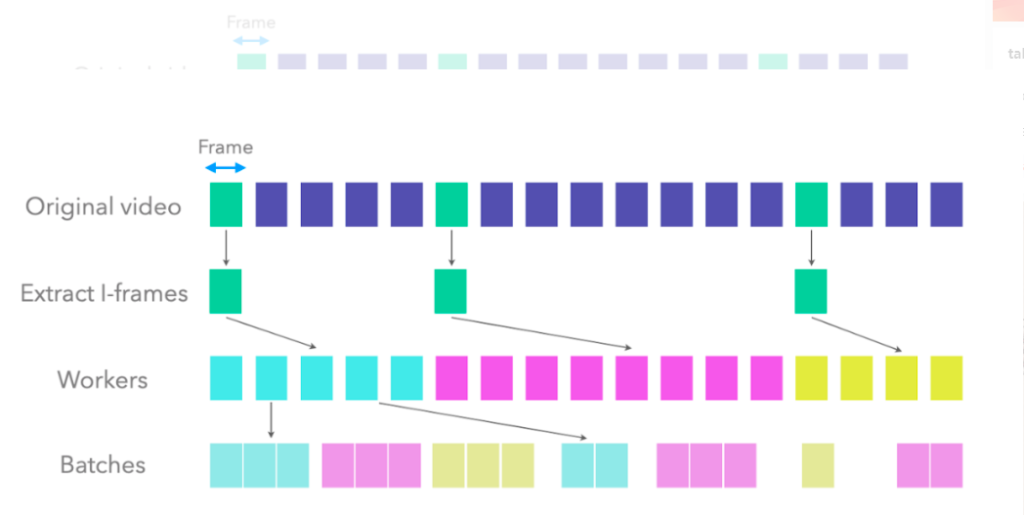

According to the team, reading and decoding video is also time-consuming, so they used a custom PyTorch IterableDataset together with PyTorch’s DataLoader, allowing multiple CPU workers to read different parts of a video in parallel.

The video is split into chunks at its I-frames, and each CPU worker reads different chunks
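The chunking scheme above can be sketched in plain Python. Starting every chunk at an I-frame means a worker can begin decoding without frames from another chunk (assuming closed GOPs, so no frame references across chunk boundaries). Inside a real `IterableDataset.__iter__`, the worker id and worker count would come from `torch.utils.data.get_worker_info()`; here they are plain arguments, and the round-robin assignment and function names are illustrative assumptions rather than the team's code.

```python
def iframe_chunks(iframe_positions, total_frames):
    """Split frames [0, total_frames) into (start, end) chunks that each begin at an I-frame.

    Assumes frame 0 is an I-frame, so no frames are dropped.
    """
    starts = sorted(iframe_positions)
    ends = starts[1:] + [total_frames]
    return list(zip(starts, ends))


def chunks_for_worker(chunks, worker_id, num_workers):
    """Round-robin split: worker k reads chunks k, k + n, k + 2n, ... for n workers."""
    return chunks[worker_id::num_workers]


chunks = iframe_chunks([0, 30, 60, 90], total_frames=100)
assignments = {w: chunks_for_worker(chunks, w, num_workers=2) for w in range(2)}
```

Round-robin keeps the workers' loads similar when chunks vary in length; contiguous ranges per worker would be an equally valid choice.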

This way of reading video is already very fast, but the team also wanted to complete all computation with a single read. They therefore implemented most of the pipeline in PyTorch with GPU execution in mind: each frame is sent to the GPU only once, and all algorithms are then applied to each batch, keeping communication between the CPU and the GPU to a minimum.
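The single-pass structure described above can be sketched in plain Python: each batch is "transferred" once, every algorithm runs on it, and intermediates computed by one algorithm are cached for the others. In the real pipeline the batch would be a tensor moved to the GPU (for example with `.to("cuda")`); here a counter stands in for that copy, and the two algorithms and all function names are hypothetical.

```python
def batched(frames, batch_size):
    """Yield consecutive slices of at most batch_size frames."""
    for start in range(0, len(frames), batch_size):
        yield frames[start:start + batch_size]


def gray(batch, shared):
    """Per-frame grayscale pixels, computed once per batch and cached in `shared`."""
    if "gray" not in shared:
        shared["gray"] = [[(r + g + b) / 3 for r, g, b in frame] for frame in batch]
    return shared["gray"]


def mean_brightness(batch, shared):
    """Hypothetical algorithm 1: average gray level of each frame."""
    return [sum(pixels) / len(pixels) for pixels in gray(batch, shared)]


def is_dark(batch, shared, limit=50):
    """Hypothetical algorithm 2: flag frames whose pixels are all below `limit`."""
    return [max(pixels) < limit for pixels in gray(batch, shared)]


def run_pipeline(frames, algorithms, batch_size=4):
    """One pass over the frames; every algorithm sees each batch exactly once."""
    results = {name: [] for name in algorithms}
    transfers = 0
    for batch in batched(frames, batch_size):
        transfers += 1   # stands in for a single host-to-GPU copy of the batch
        shared = {}      # intermediates reused by all algorithms on this batch
        for name, algorithm in algorithms.items():
            results[name].extend(algorithm(batch, shared))
    return results, transfers


# Frames are lists of (r, g, b) pixels: five dark frames, then five bright ones.
frames = [[(10, 10, 10)] * 4] * 5 + [[(200, 200, 200)] * 4] * 5
results, transfers = run_pipeline(frames, {"brightness": mean_brightness, "dark": is_dark})
```

The cache is scoped to one batch, so memory stays bounded while shared work (here, the grayscale conversion) is done only once per batch.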

The team also used PyTorch to implement more traditional algorithms, such as a shot detector that uses no neural network and mainly performs color space conversions, histogram computations and singular value decomposition (SVD). PyTorch lets the team move these computations to the GPU at minimal cost and easily reuse intermediate results shared between multiple algorithms.
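To illustrate the histogram part of such a shot detector, here is a sketch of a simpler, commonly used technique swapped in for illustration (it omits the color space conversion and SVD steps the team describes): quantize each frame's colors into a coarse joint RGB histogram and report a cut when the L1 distance between consecutive normalized histograms exceeds a threshold. The bin count, the 0.5 threshold and the function names are assumptions.

```python
def color_histogram(frame, bins_per_channel=4):
    """Normalized joint RGB histogram of a non-empty frame of (r, g, b) pixels in 0..255."""
    hist = [0.0] * bins_per_channel ** 3
    for r, g, b in frame:
        # Map each 0..255 channel value to a bin in 0..bins_per_channel - 1.
        qr, qg, qb = (v * bins_per_channel // 256 for v in (r, g, b))
        hist[(qr * bins_per_channel + qg) * bins_per_channel + qb] += 1
    return [count / len(frame) for count in hist]


def detect_cuts(frames, threshold=0.5):
    """Return the indices of frames that start a new shot."""
    cuts = []
    previous = None
    for i, frame in enumerate(frames):
        current = color_histogram(frame)
        if previous is not None:
            # L1 distance between normalized histograms lies in [0, 2].
            distance = sum(abs(a - b) for a, b in zip(current, previous))
            if distance > threshold:
                cuts.append(i)
        previous = current
    return cuts


red = [(255, 0, 0)] * 4
blue = [(0, 0, 255)] * 4
cuts = detect_cuts([red, red, red, blue, blue])
```

Every step here is a per-pixel or per-bin operation, which is why this kind of algorithm maps naturally onto batched tensor operations on a GPU.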

By using PyTorch, the team moved the CPU-bound parts of the pipeline to the GPU and used DataLoader to accelerate video reading. This made full use of the hardware and ultimately cut processing time tenfold.

The team’s developers concluded that PyTorch’s core components, such as IterableDataset, DataLoader and Torchvision, all helped them improve data loading and algorithm efficiency in production. From inference and model training resources to a complete toolset for pipeline optimization, the team is increasingly choosing PyTorch.