CPU 100% .NET performance analysis of HIS system, This is a case of performing performance analysis using the his system developed by .net in a production environment when the CPU is 100%. The post shows how to use windbg to find the performance bottleneck of the .net program. The original source is a post from cnblogs. If you can read Chinese, you can find its link at the bottom of the article.

.NET performance analysis

1. Tell a story

A few days ago, a friend added group and complained that his program is always inexplicably high cpu during the peak period. How to analyze it for help?

This morning, the CPU 100% appeared again, and the system could not log in.

After communicating with this friend, it is said that this problem has plagued them for several years, and I also invited a Microsoft engineer to come and solve it. . .

Regarding the problem of high CPU burst, old readers should know that I have written several articles, which are basically divided into two categories:

- GC trigger

- Large number of locks

A small part of it is all kinds of inexplicable problems, I can’t list them all ???, now that my friend has found me, I have to find a way to solve it for him, don’t talk too much, go to windbg.

Two: windbg analysis

1. View the synchronization block table

Encounter this problem, first check Sync block table is my inertia of thinking, the command is very simple !syncblk.

0:000> !syncblk Index SyncBlock MonitorHeld Recursion Owning Thread Info SyncBlock Owner ----------------------------- Total 20779 CCW 16 RCW 21 ComClassFactory 0 Free 16490

.NET performance analysis

I went and threw an empty one, and there was nothing in the sync block table. . . Since it has nothing to do with locks, let’s take a look at threads. After all, threads are supported by the CPU.

2. View threads

To view the system Managed thread , you can use the !tcommand, thread more, a little bit simplified.

0:000> !t

ThreadCount: 135

UnstartedThread: 0

BackgroundThread: 132

PendingThread: 0

DeadThread: 1

Hosted Runtime: no

Lock

ID OSID ThreadOBJ State GC Mode GC Alloc Context Domain Count Apt Exception

34 1 25d4 000001ea28702130 28220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 0 Ukn

74 2 3ed0 000001ea286fa940 2b220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 0 MTA (Finalizer)

76 3 4a70 000001f4447d7810 102a220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 0 MTA (Threadpool Worker)

77 4 326c 000001f4447dbe60 21220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 0 Ukn

78 6 2dac 000001f4447d9750 1020220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 0 Ukn (Threadpool Worker)

79 7 1468 000001f444a2d6f0 21220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 1 Ukn (GC)

80 8 f0 000001f444a2cf20 21220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 0 Ukn

81 9 3118 000001f444a2f630 21220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 0 Ukn

.NET performance analysis

Let’s sell it first, can any friends see what’s so strange about these threads? ? ? Yes, the thread 79last one of a Ukn (GC)mark, I’m sure you wonder, does that indicate? As the underlying GC model some changes, but anyway, it tells you a certain extent, your program triggered GC, in order to further verify, can !t -speciallook 79 threads in the end what belongs to the category as well as more detailed information.

0:000> !t -special

ThreadCount: 135

UnstartedThread: 0

BackgroundThread: 132

PendingThread: 0

DeadThread: 1

Hosted Runtime: no

Lock

ID OSID ThreadOBJ State GC Mode GC Alloc Context Domain Count Apt Exception

34 1 25d4 000001ea28702130 28220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 0 Ukn

79 7 1468 000001f444a2d6f0 21220 Preemptive 0000000000000000:0000000000000000 000001ea286ee080 1 Ukn (GC)

OSID Special thread type

41 38d8 DbgHelper

42 1c88 GC

74 3ed0 Finalizer

75 402c ProfilingAPIAttach

76 4a70 Timer

79 1468 GC SuspendEE

.NET performance analysis

We can see from the last line of output, the GC thread is 79, and there are a strange SuspendEEmark, you’re curious, which in turn do you mean?

SuspendEE=Suspend CLR Execution Engine (EE)

In other words, the thread 79 CLR Execution engine to freeze the purpose is very simple, is to facilitate the other 31 GC threads to take care of the present Managed heap, but this is really my brother machine ??, 32core, do not know which hospital so to force, supplement look, with !cpuidvalidation.

0:000> !cpuid CP F/M/S Manufacturer MHz 0 6,62,4 <unavailable> 2600 1 6,62,4 <unavailable> 2600 2 6,62,4 <unavailable> 2600 3 6,62,4 <unavailable> 2600 xxx 31 6,62,4 <unavailable> 2600

.NET performance analysis

Now that the GC trigger is predicted, the managed and unmanaged stacks of all threads can be called out in the next step.

3. View each thread stack

To see the individual threads of managed and unmanaged stack is simple, use !eestackcan then be retrieved keyword WaitUntilGCComplete to determine how many threads are waiting GC processed.

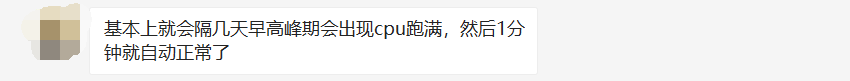

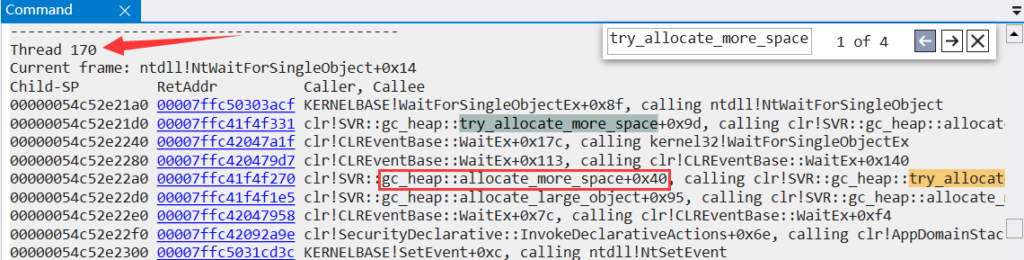

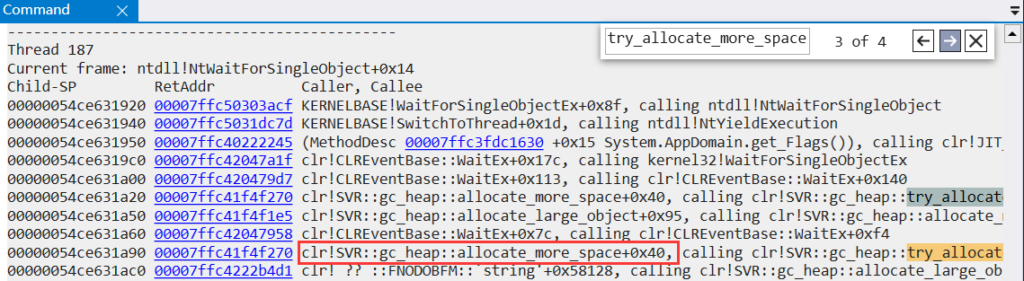

It can be seen from the figure that 40 threads are currently blocked, which is great. The problem is becoming clearer and clearer. Next, we will analyze which thread did what it shouldn’t do and cause the GC to be triggered. The same can be done. search try_allocate_more_space to determine which threads are allocated space.

I go, you can clearly see that the current 170and the 187number of threads that are allocated large object gc_heap::allocate_large_objecttriggers the GC, the large object heap itself is a daunting thing, its cleaning is very CPU-intensive recycling of resources, this is also a friend Speaking of CPU drops in about 1 minute the situation is relatively consistent.

4. Find the culprit

Now focus in on these two threads, I saw these two threads are of the same method at the stack, so here pick a 187 thread to analyze it, can !clrstack look it managed stack.

0:187> !clrstack

OS Thread Id: 0x1ef0 (187)

Child SP IP Call Site

00000054ce631e30 00007ffc4021bde2 System.String.FillStringChecked(System.String, Int32, System.String)

00000054ce631e70 00007ffc402222a8 System.String.ConcatArray(System.String[], Int32)

00000054ce631ed0 00007ffc40223528 System.String.Concat(System.String[])

00000054ce631f40 00007ffbe6dbdafb BLL.xxx.xxx.GetRowString(System.String, Boolean, Boolean, System.String, System.String, System.String, System.String, System.String, System.String, Int32, System.String, System.String, System.String, System.String, System.String, System.String, Int32, Int32, System.String, System.Nullable`1, System.Nullable`1, System.String, System.Nullable`1, System.Nullable`1, System.Nullable`1, System.Nullable`1, System.String, System.String, System.String, System.String, System.String ByRef)

00000054ce65cf40 00007ffbe6ab3187 BLL.xxx.xxx.ExpZB(System.String, Boolean, Boolean, System.String, System.String, System.String, System.String, Int32, System.String, System.String, System.String, Int32, System.String, System.String, System.String, System.String, System.String, System.String, Int32, Int32, System.String, System.Nullable`1, System.Nullable`1, System.String, System.Nullable`1, System.Nullable`1, System.Nullable`1, System.Nullable`1, System.String, System.String, System.String, System.String)

.NET performance analysis

From the stack point of view, looks like the use of System.String.Concatstitching string caused Goodfellas, string stitching so many years I do not know how many times to be attacked, there are still many people who stepped pit ???, in order to further verify that you can use !clrstack -p + !da -details xxxto see this What exactly is System.String[], simplified as follows:

0:187> !clrstack -p

OS Thread Id: 0x1ef0 (187)

00000054ce631e70 00007ffc402222a8 System.String.ConcatArray(System.String[], Int32)

PARAMETERS:

values (<CLR reg>) = 0x000001ea69e8d2f8

totalLength = <no data>

0:187> !da -details 0x000001ea69e8d2f8

Name: System.String[]

Size: 128(0x80) bytes

Array: Rank 1, Number of elements 13, Type CLASS

Element Methodtable: 00007ffc403d6948

[0] 000001f2391a83f0

Name: System.String

MethodTable: 00007ffc403d6948

EEClass: 00007ffc3fcd50e0

Size: 445950(0x6cdfe) bytes

File: C:\Windows\Microsoft.Net\assembly\GAC_64\mscorlib\v4.0_4.0.0.0__b77a5c561934e089\mscorlib.dll

String: xxxxx

.NET performance analysis

From the output information, we can see that String[]there are 13 elements in it. The largest string is 445950 bytes = 445kgreater than the 85k limit of the large object, so Concat here is the crux of the problem. The same is true for 170 threads. Next, I have to solve it. One question is: Why is there such a large string produced, and what exactly is done in the code? ? ? To find the answer, you have to export the source code from the dump to find out.

5. View the problem code

To analyze the problem of code, you can !ip2md + !savemodule export BLL.xxx.xxx.GetRowString method.

0:187> !ip2md 00007ffbe6dbdafb MethodDesc: 00007ffbe5342118 Method Name: BLL.xxx.xxx.GetRowString(System.String, Boolean, Boolean, System.String, System.String, System.String, System.String, System.String, System.String, Int32, System.String, System.String, System.String, System.String, System.String, System.String, Int32, Int32, System.String, System.Nullable`1<Int32>, System.Nullable`1<Int32>, System.String, System.Nullable`1<Single>, System.Nullable`1<Single>, System.Nullable`1<Single>, System.Nullable`1<Single>, System.String, System.String, System.String, System.String, System.String ByRef) Class: 00007ffbe52fe328 MethodTable: 00007ffbe53421d8 mdToken: 0000000006000096 Module: 00007ffbe471a890 0:187> !savemodule 00007ffbe471a890 E:\dumps\RowString.dll 3 sections in file section 0 - VA=2000, VASize=f7fcc, FileAddr=200, FileSize=f8000 section 1 - VA=fa000, VASize=3bc, FileAddr=f8200, FileSize=400 section 2 - VA=fc000, VASize=c, FileAddr=f8600, FileSize=200

.NET performance analysis

Then resorted to ILSpydecompile the code.

Boy, this really written ??, countless string concatenation, I feel genand LOHhave no time to allocate memory segment of ???, really killed the GC. . .

Three: Conclusion

In fact, this is a textbook-style problem, and there are textbook-style solutions, and I saw that this method has more than 600 lines of code, basically it is to do string splicing, and finally I will talk about the solution.

- Refactor the method, try to use StringBuilder instead of String to minimize the number of triggers due to this GC.

The last little easter egg seems to coincide with the deep suspicion of my friends. . .

reference: https://www.cnblogs.com/huangxincheng/p/14767250.html