(Thomson Reuters, for example, employs an information security policy aligned to the well-known NIST Cybersecurity Framework, and Westlaw offers multi-factor authentication (MFA) and two-factor authentication (2FA) for secure user log-in. These are just some of the security features that consumers should look for when selecting a technology provider.)

In fact, given some of the psychological attributes commonly associated with lawyers (a focus on detail, a desire for control, an aversion to risk), the greater danger might very well be underutilization of, rather than overreliance upon, artificial intelligence.

For example, take ediscovery and predictive coding. When predictive coding first emerged, there was discussion and dispute over whether and how it could be used. But today there is widespread acceptance that predictive coding in general, and specific programs or platforms in particular, are sufficiently reliable to be used. (Thomson Reuters eDiscovery Point, for example, was very much designed with defensibility in mind.)

This will make the provision of legal services more efficient and more effective. It will benefit clients, who will receive better service in a more cost-effective manner, and it will benefit lawyers, who currently suffer from high rates of burnout, anxiety, depression, and addiction.

And AI is not new to the legal profession, as Dr. Chris Mammen, IP litigation partner at Hogan Lovells, points out:

In other ways, everything has changed. AI and other innovative technologies are creating, and will continue to create, novel situations that are not explicitly addressed in the rules of legal ethics and that the drafters of these rules never even imagined.

David Curle of Thomson Reuters puts it well: "If lawyers are using tools that might suggest answers to legal questions, they need to understand the capabilities and limitations of the tools, and they must consider the risks and benefits of those answers in the context of the specific case they are working on."

But what are those biases, and are they fair? If the data used to train the AI contains unfair biases, then the results of the AI could be correspondingly biased.

No one expects you to understand exactly how the technology works, but as a legal professional you must have a basic understanding so that you are able to weigh its benefits and risks.

And AI technologies might eventually not just be useful tools, but even essential tools, for attorneys. As David Curle puts it:

"Part of the lawyer's duty of competence involves keeping abreast of changes in law and in legal practice, and these changes, in 2018, inevitably involve technology."

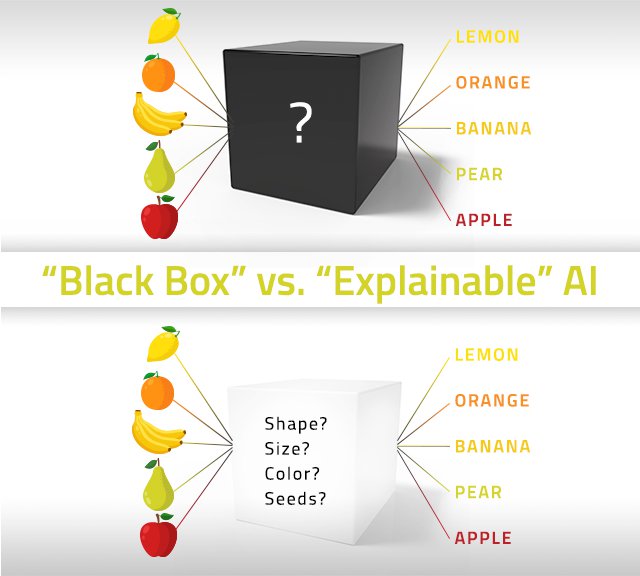

The effort to reduce unfair bias in AI tools finds expression in the movement toward "explainable AI." As Tonya Custis explains, "There's a trend in the direction of explainable AI, where we develop the models to be clear about how they generate their answers. This is especially important in the legal field, where the customers want to know: what are the factors that went into a given decision? Why did Westlaw recommend this particular case?"

Even when choosing the right solution, you must be mindful that there are some tasks that are not appropriate for handling by AI, as well as some tasks where it would be unethical not to use the technology, and you must know how to tell them apart.


The challenges that artificial intelligence poses to legal ethics, while significant, can be addressed and should be addressed, so that lawyers can take advantage of the powerful tools driven by AI.

In the judicial system, one prominent example is judges making sentencing decisions based in part on AI-driven software that claims to predict recidivism, the likelihood of committing further crimes. There is concern over how the factors used in the algorithms of such software could correlate with race, which judges are not allowed to take into account when sentencing.

As lawyers rely more and more on AI and other technologies, and as those tools become more advanced and more complex, lawyers must be sure that they understand how those technologies work.

These chatbots can be very helpful to consumers, especially consumers who cannot afford the high cost of hiring a lawyer, and they could help bridge the yawning justice gap that exists in the United States and around the world. But they do raise the issue of unauthorized practice of law, especially if the chatbot or other tool is created or maintained by an attorney.

Make sure the legal research work is being done competently

Depending on who (or what) a lawyer works with, the duty of competence includes a duty of supervision. As Chris Mammen of Hogan Lovells explains, "If a lawyer delegates something to subordinates, whether junior lawyers or paralegals, there's an ethical duty to make sure the work has been done competently. And this duty extends to AI-based tools. One way of analyzing the issue is that the lawyer who reviews and signs off has appropriately supervised the AI."

"Large numbers of lawyers don't take this duty to keep up with technology seriously enough," according to David Curle of Thomson Reuters. "It's not just AI-based technology but even more mundane things like practice management platforms, and other tools that make it easier and more efficient to practice law."

"All technologies, old or new (from manual Bates stamps, to email, to AI), are tools to support the practice of law and to help us advise our clients," says Chris Mammen of Hogan Lovells. "We should make appropriate use of them to provide the best, most efficient, most cost-effective service to our clients."

"We don't have rules and opinions that directly apply to these situations," according to ethics lawyer Megan Zavieh. "We have to look at the spirit of the rules, and balance protecting the public with allowing for innovation in the delivery of legal services."

Artificial intelligence operates by looking for patterns in large amounts of data. This training of AI is, as Dr. Tonya Custis of Thomson Reuters puts it, "a statistical process; it will have biases."

Lawyers must therefore have a general understanding of technology and artificial intelligence. And they must also understand the general operation of the specific AI tools that they use in their own practices.

"Say you have two guys working out of their garage in Silicon Valley. All of their friends are other guys. The technology they develop could look very different if only they had women on their team. A lot comes down to imagining who's going to use your product. Without diverse people on your team, you might not consider points of view or experiences that are obvious to those with different societal experiences."

In fact, explainability could become a legal requirement. In some jurisdictions, a right to explanation is starting to emerge.

Case Western: Ethical Implications of Legal Practice Technology

If the data used to train the AI contains unfair biases, then the results of the AI could be correspondingly biased. Find a provider with trusted data.

"We're navigating murky ethical areas where the law and rules haven't caught up yet with the technology," according to ethics and disciplinary lawyer Megan Zavieh. "We're trying to apply rules that were written based on certain ways of practicing law, and now trying to apply them to very different ways of working."

"Algorithmic searches, when run across deliberately and consistently organized information such as the content on Westlaw, necessarily yield better results," Kruk adds. But note how attorneys work alongside technologists and R&D to assess and validate results generated by algorithmic searches.

"AI has the potential to transform the legal profession in so many positive ways," predicts ethics attorney Megan Zavieh. "If we can start to push down the work that takes up too much of our time to AI products, much as we've done with other forms of technology in other areas, we can free up lawyer time to do the things we do best: the legal analysis and arguing in court that can't be replaced by robot lawyers."

Think of an AI system like Westlaw Research Recommendations. It plows through huge amounts of data to suggest that a case may be relevant, but the lawyer still has to decide whether that case actually is relevant. The AI augments, but does not replace, the work of the lawyer.

"AI requires data: data about actions and decisions made by humans," explains David Curle. "If you have a system that's reliant on hundreds of thousands or millions of human decisions, and those humans had biases, there's a risk that the same bias will occur in the AI."

"Humans, for all our positive attributes, are fallible too," notes David Curle of Thomson Reuters. "The machines have the advantage of their biases being more predictable and perhaps more easily remedied. Data scientists have been working on this problem and are in a position to clean up the biases in data. The biases baked into hundreds of thousands of years of human life are harder to root out."

Hundreds of staff attorney editors write, curate, organize, and revise legal content for Westlaw, according to Teri Kruk, Senior Director for Content Strategy and Editorial at Thomson Reuters Legal. Former law clerks, law review editors, practitioners from large and small firms, prosecutors: all have chosen a path less travelled in the legal profession, but one that is critical to the continued life of the law and the profession.

As Hogan Lovells partner Chris Mammen notes, "If all of the natural-language processing is done behind the law firm firewall, that's one thing. But if it's being handled by a vendor's server somewhere out there, how sure are you that what could be confidential or privileged information is not being placed in a context where it isn't adequately protected?"

Perhaps the most widely discussed example of balancing the risks and rewards of artificial intelligence is the self-driving car. Far from being a rote exercise, programming an autonomous vehicle involves difficult choices that will generate extensive ethical and legal debate in the years ahead. In fact, these debates are already taking place, in the legislatures of the 40-plus states that have passed, or have considered passing, laws to govern self-driving cars.

"We need to have some understanding of what's going into an AI tool and what's coming out of it," according to ethics lawyer Megan Zavieh, who represents lawyers facing disciplinary charges. Just as lawyers can't prove they satisfied their ethical duties simply by hiring an outside consultant, they similarly can't establish ethical compliance simply by using an AI tool.

Not All Legal AI is Created Equal Ebook

We don't need a crazy amount of additional time and energy to show our clients a lot more care and interest in their concerns. We need just a little more capacity, and AI is a great way to make that happen.

To make sure that AI tools are not unfairly biased, it's important to have diverse teams working on these tools. Custis poses this hypothetical:

A lawyer's duty of competence and diligence includes the duty to use tools and technology where appropriate. So at a certain point in time, a lawyer might have an ethical duty to affirmatively use AI, where that AI is accurate, reliable, and essential to serving the client effectively.

In other words, in order to survive and thrive in a challenging marketplace, lawyers of the future will need to use AI.

In this webinar, David Curle of Thomson Reuters provides background information on artificial intelligence and discusses the many different ways that AI is being used in the legal world:

At the same time, lawyers are not programmers, and the ethical rules recognize this. As David Curle notes, "The current rules of professional responsibility are general enough to cover the situation. They suggest two things: that lawyers must understand enough about a new technology to see the risks, and that lawyers must understand enough to see the benefits."


When we think of issues of client confidentiality and attorney-client privilege, we often think of confidences that clients share with their lawyers. But those confidences are also sometimes shared with AI-powered tools, and lawyers need to ensure that client confidences remain secure at every stage of the process, especially in an age where every week seems to bring news of a new data breach.

At the end of the day, the legal profession is all about serving the client, and there's no denying that AI is a powerful tool for client service.

Imagine, for example, a voice-activated personal assistant that can handle legal research questions (such as the RightsNOW App, a voice-activated legal information tool that just took top honors at the 2018 Global Legal Hackathon). It's a great innovation, but it must also be a secure innovation.

Interestingly enough, in light of the whole "will robots take our jobs?" fear, the role of human beings remains essential in developing AI. For example, consider Westlaw, which uses AI in a wide range of features, from Research Recommendations to Folder Analysis to Westlaw Answers.

Lawyers are not computer scientists or technologists, and nobody would expect them to appreciate the algorithm-level workings of AI systems. But at the same time, they must have some basic understanding of how the tools they utilize generally work.

On the one hand, everyone loves to talk about "robot lawyers," but on the other hand, we've been using AI in our practice in a variety of ways for years. Think of natural-language searching for online legal research, or the use of predictive coding in ediscovery.

For example, imagine training facial-recognition software on a group of people who come from only one racial or ethnic background, or training voice-recognition software using only male voices. The resulting AI tools will be biased: not as inclusive as they should be, and not as useful either.

Take, for example, social media. The original rules governing lawyer advertising and client communication were drafted well before the age of Facebook, Twitter, and LinkedIn.

Artificial intelligence is transforming the legal profession, and that includes legal ethics. AI and similar cutting-edge technologies raise many complex ethical issues and challenges that lawyers ignore at their peril.

And just as there are some tasks that a lawyer simply cannot delegate to a paralegal or legal assistant, there are some tasks that are not appropriate for handling by artificial intelligence, and an attorney must know how to tell them apart.

"The ethical issues raised by AI are in many ways not that different from the ethical issues that lawyers have faced before," says David Curle, Director of the Technology and Innovation Platform at the Legal Executive Institute of Thomson Reuters. "When using tools in their work, whether AI-powered tools or any others, lawyers still have the same duties, including duties of supervision and independent judgment."

What this means in practice is that lawyers need to find trusted providers of AI-based solutions, and they need to pose smart questions to the providers whose AI tools they are considering using. Lawyers need to understand, at a basic level, how the solutions work and how the solutions were developed.

In some ways, nothing has changed. The general ethical duties of lawyers remain constant across technologies.

"Having worked in AI for the legal profession for a long time, I know how the customer base is conservative," says Tonya Custis of Thomson Reuters. "With Westlaw natural-language searching, lawyers will ask, 'Why am I getting results that don't use the specific words I searched for?' You need to explain to the customer how the process works."


Lawyers operate within a network and a professional context. They must deal with colleagues, adversaries, courts, and regulators. And so even if an individual lawyer understands AI well, it's important for the other actors in the system to understand AI as well, and to have some sort of consensus about what AI is reliable and how AI can be appropriately used.


"One way of framing this issue is automation versus augmentation," states Dr. Tonya Custis, a Research Director at Thomson Reuters who leads a team of research scientists developing natural-language and search technologies for legal research. "There may be some tasks that we shouldn't automate. For these tasks, AI can help attorneys do their jobs, but AI can't do their jobs completely. So the question becomes: where do we draw that line?"

These technologies most definitely include AI, which we might not even think about as a discrete technology in the future. Per Zavieh, "We think AI is a huge thing right now. But in a few years, it won't be thought of as AI, and it will just be a useful tool."

According to David Curle's 2018 AI Predictions, legal professionals should be concerned about the ethical implications of applying AI technologies to their practice, including the confidentiality of hosted data used in AI applications and the risk of data breaches, as well as risks related to confidentiality, privilege, and commingling of multiple clients' data when using AI to analyze law firm billing data.

"One way that we lawyers fall short as a profession is in our human interaction," Zavieh explains. "Not enough lawyers are caring enough about their clients and their problems. As a result, we have not just a business-development problem but also an ethics problem: we need to be invested enough in our clients to put forth the effort that's required to see their matters through successfully."

More than 30 states have adopted a comment to the Model Rules of Professional Conduct making clear that "[t]o maintain the requisite knowledge and skill, a lawyer should keep abreast of changes in the law and its practice, including the benefits and risks associated with relevant technology." And artificial intelligence is most definitely "relevant technology." Indeed, as Erik Brynjolfsson and Andrew McAfee wrote in a cover story for the Harvard Business Review, AI is "[t]he most important general-purpose technology of our era."

Lawyers Assess the Risks of Not Using AI

"The ethical duty of competence requires being appropriately up to speed on technology," says Chris Mammen of Hogan Lovells. "So AI is not something you can stick your head in the sand over, just as you couldn't try to conduct a document review in a major litigation entirely in paper."

When it comes to addressing bias in AI tools, a little awareness goes a long way. As Tonya Custis emphasizes, "It's important that people practicing AI and training the models are aware of the biases in the tools and aware of how the models are getting implemented."

Just as lawyers can over-delegate work to subordinates, they can also under-delegate, causing them to serve their clients less efficiently. In the context of artificial intelligence, one can imagine underutilization of AI: for example, a lawyer not using AI even though it could help that lawyer serve the client better.

And it's not just a matter of lawyers having more time to write briefs or draft contracts; it's also about the human element.

I get calls from lawyers who have creative ideas for helping people with legal problems, and they'll tell me that they talked to three other ethics lawyers who told them no. That's the risk-aversion of our profession at work. But there has to be a way to innovate and move forward, to help consumers in different ways, and to close the justice gap, while at the same time not getting into disciplinary trouble.

One of the most exciting developments in legal technology is the rise of legal chatbots, AI-powered programs that interact with users who have legal issues by simulating a conversation or dialogue. These chatbots are now being used to perform such tasks as fighting parking tickets, advising victims of crimes, and drafting privacy policies or non-disclosure agreements.

For artificial intelligence, one of the most notable issues is the "black box" challenge. A lawyer submits a query to an AI-powered tool, it goes into a black box, and the AI-based solution provides an answer. How much does a lawyer need to know about what goes on inside that black box?

Legal information and content is not fungible. Its creation, whether by the courts, legislatures, renowned authors, or in-house attorney editors, is deliberate, thoughtful, and part of a larger organism that is called the law.

Indeed, when it comes to rooting out unwanted bias, computers might have certain advantages over humans.

Artificial intelligence isn't perfect, but neither are people. As David Curle puts it, "The issue with AI is: accurate compared to what? Humans make mistakes too."

At the same time, AI also holds out the promise of helping lawyers to meet their ethical obligations, serve their clients more effectively, and promote access to justice and the rule of law. What does AI mean for legal ethics, what should lawyers do to prepare for these changes, and how could AI help improve the legal profession?

Artificial intelligence is another area where the rules of legal ethics are playing catch-up with the technology. Here are some of the ethical issues raised by AI.
