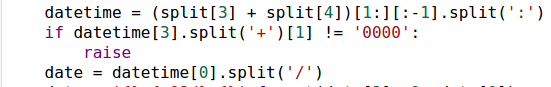

I got up to get another cup of coffee, and as I was walking back to my desk, I had a really stupid thought: since I know what the edge cases look like, can I just raise a runtime error if I see them?

About a week ago, [company name omitted], one of the largest and most well-known tech companies, sent me one of these challenges, and I faced this exact situation. After working on the last problem for a bit, I had a solution that passed eight of the nine test cases. Sigh.

Bingo: I knew which edge case the problematic input fell into and could skip writing solutions for the other three.

Once again, thanks for reporting this. But we'll display the Runtime Error to make sure the product is easy for test solvers to solve. We'll also work towards making better testcases, so that you are forced to take care of all the corner cases.

In this case, kudos on tracing out the corner case. For example, your idea of "Since I know what the edge cases look like, can I just raise a runtime error if I see them?" is not something all candidates might get during an online assessment.

His reasoning is understandable, but I still feel that they should just mark test cases that raise errors as incorrect. Still, it's cool that the CTO himself responded.

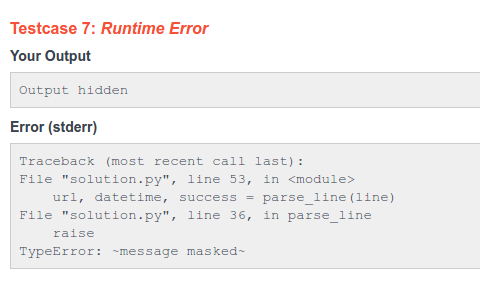

HackerRank does have functionality to mask the error message, so you aren't able to write a custom exception that dumps the exact input, but this is still a massive bug and something that should be addressed urgently. I'll be sending this to HackerRank and to the company that gave me the challenge.

HackerRank's CTO, Hari Karunanidhi, reached out to me:

HackerRank lets you see how many hidden test cases there are, as well as how many of them you have passed. This leads to many frustrating moments when your code gives the correct output on every test case except one.

Then I scrolled down and pressed the submit button:

Harishankaran Karunanidhi (HackerRank)

A few companies have developed platforms that make this easier for employers, and by far the most popular is HackerRank. It gives you a problem description at the top, a text editor below it for writing and running code, and then a sample input to test on along with its expected output.

After you write code that solves the known input, the server runs it on inputs that you are not supposed to be able to see. This gives companies a gauge of how well you can write code that handles edge cases you were not shown.
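To make the setup concrete, here is a minimal sketch of how a hidden-test judge of this kind might work. It is not HackerRank's implementation; `Verdict`, `judge` and `summary` are hypothetical names, and the verdict strings are assumptions. The point it illustrates is that the candidate never sees the hidden inputs, only a pass count and one verdict per case.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Verdict:
    passed: bool
    status: str  # "Accepted", "Wrong Answer" or "Runtime Error" (assumed labels)


def judge(solution: Callable[[str], str],
          hidden_cases: List[Tuple[str, str]]) -> List[Verdict]:
    """Run `solution` on every hidden (input, expected_output) pair.

    Hypothetical, simplified judge: the candidate never sees the inputs,
    only one verdict per case.
    """
    verdicts = []
    for given, expected in hidden_cases:
        try:
            output = solution(given)
        except Exception:
            # The exception message is masked; only the verdict type is shown.
            verdicts.append(Verdict(False, "Runtime Error"))
            continue
        if output.strip() == expected.strip():
            verdicts.append(Verdict(True, "Accepted"))
        else:
            verdicts.append(Verdict(False, "Wrong Answer"))
    return verdicts


def summary(verdicts: List[Verdict]) -> str:
    """Build the pass-count line the candidate sees."""
    passed = sum(v.passed for v in verdicts)
    return f"{passed}/{len(verdicts)} hidden test cases passed"


if __name__ == "__main__":
    # Hypothetical problem: print the sum of the integers on one line.
    def candidate(data: str) -> str:
        return str(sum(int(tok) for tok in data.split()))

    hidden = [("1 2 3", "6"), ("", "0"), ("1,2", "3")]
    results = judge(candidate, hidden)
    print(summary(results))
    print([v.status for v in results])
```

Even with the message masked, the distinction between "Wrong Answer" and "Runtime Error" is itself visible information about each hidden input.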

I read the problem description again and identified four areas where there could be an edge case, one of which would require rewriting the main data structure I had used.

Thanks for sharing the feedback. We have a tough job of finding the right balance between having the test secure and also candidate friendly. For example, hiding the type of error would make it really frustrating for a developer to solve a challenge.

For those not in the tech space, or for those who are but haven't had to interview recently, one of the most recent trends in recruiting is to send candidates a coding challenge. The idea is to screen out those who can't actually write code (apparently this is surprisingly common) so that you don't have your engineers interviewing someone who is clearly not qualified.

Being lazy, I obviously did not want to do this. I really wanted to know which hidden input I was failing on, so that I didn't have to add code for all four of these edge cases when they were only testing for one.

I really didn't expect this to work, but I added the two lines of code to test it:
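The original two lines aren't reproduced here. As a minimal sketch of the idea only, assuming a hypothetical problem (sum the integers on a line) and a hypothetical suspected edge case (a negative number in the input), the check can be wrapped around any solution so that a hidden input matching the suspicion shows up as a Runtime Error verdict. `probe`, `solve_sum` and `has_negative` are illustrative names, not the author's code.

```python
from typing import Callable


def probe(solve: Callable[[str], str],
          suspected: Callable[[str], bool]) -> Callable[[str], str]:
    """Wrap `solve` so it crashes on purpose when `suspected(input)` is true.

    Submitting the wrapped version turns "does a hidden input hit this edge
    case?" into a visible Runtime Error verdict, even if the error message
    itself is masked.
    """
    def wrapped(data: str) -> str:
        if suspected(data):  # the two-line trick: detect, then raise
            raise RuntimeError("suspected edge case reached")
        return solve(data)
    return wrapped


# Hypothetical example problem: print the sum of the integers on one line.
def solve_sum(data: str) -> str:
    return str(sum(int(tok) for tok in data.split()))


# Hypothetical suspected edge case: the input contains a negative number.
def has_negative(data: str) -> bool:
    return any(tok.startswith("-") for tok in data.split())


if __name__ == "__main__":
    checked = probe(solve_sum, has_negative)
    for hidden_input in ["1 2 3", "4 -5", "10"]:
        try:
            print(hidden_input, "->", checked(hidden_input))
        except RuntimeError:
            print(hidden_input, "-> Runtime Error")
```

Submitting one probe per suspected edge case narrows things down: if the previously failing hidden case now reports a Runtime Error while the others still pass, that edge case is the one being tested.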